
The 6 Patterns of AI Content

TL;DR: Most AI content is obvious garbage. 73% of people distrust text they feel AI wrote.

👇🏼 Scroll down to see the math or click 👉🏼AI Content Framework to grab the framework

The Top 6 AI Generated Content Patterns.

Last updated: July ‘25 | By: Shawn David | 19 – 22 minutes read | 10 sources

Too long? Too many words? Got you. Get the PDF emailed to you or watch me run this down on video!

Table of Contents

- Introduction

- How to identify AI content

- Sentence Structure

- Overused words

- Zero Personalization

- ‘Explainer’ Loop

- No transitions

- Lack of specifics

- Industry-Specific Identifiers

- Future-Proofing

- Sources and links

AI Generated Content. Can you spot it?

AI-created content is not the future; it’s already here. It’s flooding your feeds, clogging your inbox, and stuffing websites with word salad dressed as thought leadership. By 2027, 80% of business content will be AI-assisted[2], and most of it? Absolute spam-worthy trash.

In a perfect world, AI would follow brand guidelines, honor tone, and spit out compliant copy with a smile.

But we don’t live in that world. We live in the “meh, good enough” era of AI slop.

Brands that fought hard to earn trust and craft a voice are now one lazy prompt away from torching it all.

Off-the-shelf AI will fail your brand every time.

Why?

Because 73% of people[2]:

- Think they can spot AI-generated content.

- Judge you negatively for it, even if they are wrong[9]!

‼️Use Unedited AI Generated Content at Your Own Risk ‼️

Best case? You lose a customer.

Worst case? You are featured on an episode of Last Week Tonight.

This isn’t just a business perception, brand voice, public relations or marketing problem.

It’s a bottom-line profit and loss problem:

- Lower credibility → Lower conversions.

- Robotic tone → Poor search rankings or downright removal.

- Generic messaging → Customer acquisition cost (C.A.C.) can skyrocket when your ideal customer profile (I.C.P.) isn’t specific.

- Obvious AI writing → Brand reputation damage or outright open criticism.[3]

Here’s the twist:

The smartest content creators aren’t avoiding AI. They’re using it to scale their voice, not erase it.

Why This Guide Exists

I can generate unlimited content offline using dozens of finely tuned AI models through Content Buddy[4], my local offline AI LLM engine. But volume means nothing without trust.

I needed to know:

Can I tell when something is AI-written?

So I ran my own tests.

After analyzing over 10,000 pieces of synthetic content across 10 categories and 50 industries, I reverse-engineered the 6 patterns that make AI generated content feel ‘off’ and built a systematic way to fix it.

This is not a theory or simple research project, although it started off as one. From this corpus of data I have built a field-tested framework that beats AI detectors and connects like a human.[1]

What you’ll learn:

- The exact patterns AI follows.

- A systematic approach to transform any AI output.

- Industry-specific strategies that actually work.

- Tools and frameworks for scaling your efforts.

- Metrics to measure your humanization success.

The 6 Patterns of AI Created Content

Every piece of obviously AI-generated content follows predictable and verifiable patterns.

🤖 AI Created Content Pattern #1 [Suspect Sentence Structure]

Large Language Models like ChatGPT, Claude AI, or Gemini are labeled by nerds as ‘deterministic probability engines’ or, more simply, incredibly powerful auto-completes. The moon is called ‘Luna’ by nerds too. We like to label things.

What does this look like in practice?

It sounds fine… until you realize you’re sleep-scrolling through a wall of “technically correct” nothingness.

Why? Because the sentences all feel the same.

Without a machine learning background, it’s super easy to think of an LLM ‘writing’ as creation. But in reality, there is no ‘creation’ happening. There is a completed equation, solved for the highest likelihood of human acceptance.

Large Language Models (LLMs) work by predicting the most likely next word based on the words that came before it.

That’s it. There’s no intent, no awareness, just probability.

LLMs look to complete a sentence so that each word continues the previous set of words. If X = 1 then Y = 2, repeat until the equation is solved. That solved equation is what we call ‘AI generated content‘.

Example: If the input is “Peanut butter and __________”, the model has seen millions of examples where “jelly” follows. So it picks “jelly” with the highest score.

Mathematically, it’s just assigning probabilities like this:

jelly: 0.83

bananas: 0.06

regret: 0.0003

And it picks the top one. Then it repeats. Again and again, until it hits whatever ‘weight’ limit the people who trained the model set for it. In this simplified picture, if you say anything over 0.8 is good enough to return as the next word, it would never keep iterating until it found maybe a 0.9, which is ‘grape jelly’.

‘Good enough’ to statistically satisfy the ‘next word’ in the sentence.
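Here is a toy sketch of that ‘good enough’ idea in Python. It only mirrors the simplified picture above and is not how any particular model is actually implemented. The function names, the `threshold` parameter, and the ‘grape jelly’ score are assumptions made up for illustration.

```python
from typing import Optional

# Toy next-word scores from the peanut butter example above.
# Real models score a huge vocabulary, not three words.
next_word_scores = {"jelly": 0.83, "bananas": 0.06, "regret": 0.0003}


def pick_top(scores: dict) -> str:
    """Return the candidate with the highest score."""
    return max(scores, key=scores.get)


def pick_good_enough(scores: dict, threshold: float) -> Optional[str]:
    """Return the first candidate whose score clears the threshold.

    Hypothetical helper: it stops at the first acceptable word, so a
    better-scoring option later in the list is never considered.
    """
    for word, score in scores.items():
        if score >= threshold:
            return word
    return None


print(pick_top(next_word_scores))               # jelly
print(pick_good_enough(next_word_scores, 0.8))  # jelly

# Add a made-up, better-scoring option after "jelly": it is never reached.
with_grape = {"jelly": 0.83, "grape jelly": 0.9}
print(pick_good_enough(with_grape, 0.8))        # jelly
```

The last call is the point: once 0.83 clears the bar, the search stops, and the 0.9 option never gets a look.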

That means AI content isn’t designed to be good. It’s designed to be accepted by most humans.

So when people say “AI wrote this,” it really means “AI guessed each next word, one token at a time, based on patterns it saw in training.” Something that is a bit deeper than this guide, but critical to know: Tokens aren’t just letters or words. Depending on the system, a token could be a word, a phrase, or even an embedding of something massive; like the entire opening of Moby Dick.

The issue with deterministic probability is that math doesn’t like chaos. Math is defined by its rules, and these LLMs are tuned math equations that currently want to satisfy 1.28 x 10^13, or 12,800,000,000,000, possible combinations. This seems impossible, almost limitless, but remember, it’s a probability problem, not an arithmetic one.

The LLM predicts the next word in sequence based on math, not on what is ‘correct’.

What is an AI to do in this situation, seemingly unlimited inputs and outputs and no real way to determine success state?

Solve for human biases like the rule of three[6], grouping like objects[7], and creating a rhythm[7] to ‘complete’ the sentence. Notice the math doesn’t include ‘correct’ or ‘proper’ or anything subjective. The output is binary: complete or not. The nuance of subjective ‘good’ vs ‘bad’ does not exist as a variable in the equation.

We’ll get into how AI fakes human nuance (on purpose) later, but for now, just know: if the writing feels “good,” that doesn’t come from the system itself. It comes from human feedback.

This pattern isn’t intentionally programmed. It ‘bubbles up’ naturally because of the way the technology works.

Because we create in specific ways ourselves, we project that expectation onto anything that appears to create the way we do.

AI-created content vs. human-created content boils down to one principle:

Humans create to be understood.

AI creates to be accepted.

The TL;DR of this pattern: humans create to express, and the AI is solving for probable likelihood.

Assigning any sort of creative expression to the LLM is the same as expecting emotional context from a calculator.

The Problem:

- The most statistically likely next words tend to follow common sentence structures (subject–verb–object).

- Those common structures often produce predictable cadences: 15–25 word sentences, similar punctuation rhythm, repeated clause structures, etc.

- Over time, it creates a “drumbeat” effect in the AI created content; unintentional, robotic-feeling rhythm.

The Fix:

- Mix short punchy statements with longer explanatory sentences.

- Use fragments, especially emotionally charged ones, to convey motion and wake the reader up!!!

- Ask questions, but make sure you answer them. Don’t leave the reader curious for too long.
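One rough way to check for the “drumbeat” rhythm described above is to count the words in each sentence and see how much the counts vary. This is a minimal sketch, not a detector: the function names and both sample passages are made up for illustration, and the sentence splitting is deliberately naive.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? and return the word count of each sentence."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def rhythm_spread(text: str) -> float:
    """Population standard deviation of sentence lengths, in words."""
    lengths = sentence_lengths(text)
    return statistics.pstdev(lengths) if len(lengths) > 1 else 0.0


# Made-up samples: one with identical cadence, one that mixes lengths.
robotic = (
    "The tool improves team efficiency across many departments. "
    "The platform supports fast growth across many markets. "
    "The system reduces manual effort across many workflows."
)
varied = (
    "Stop. Most teams do not need another dashboard, they need one "
    "report that someone actually reads every Monday. Start there."
)

print(sentence_lengths(robotic))  # [8, 8, 8]
print(sentence_lengths(varied))   # [1, 17, 2]
print(rhythm_spread(robotic) < rhythm_spread(varied))  # True
```

A spread near zero means every sentence lands on the same beat. A bigger spread doesn’t prove a human wrote it, but it usually reads less like a metronome.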

Pattern 1 Examples

| Before (Robotic) – 90%+ ‘Created by AI’ Score. | After (Human) – < 1% ‘Created by AI’ Score |

| “Artificial intelligence is transforming industries by streamlining workflows, increasing efficiency, and providing innovative solutions to modern business challenges.” | “AI isn’t magic. It’s math. Want to make it useful? Stop chasing trends. Start solving real problems.” |

| “Artificial intelligence enables organizations to enhance operational efficiency, reduce costs, and drive innovation through automated systems and predictive analytics.” | “Most teams don’t need AI. They need to stop doing the same task ten times a week.” |

| “By leveraging data-driven insights, companies can optimize customer experiences, increase engagement, and deliver personalized solutions at scale.” | “The customer isn’t a spreadsheet. Stop treating them like one.” |

| “The implementation of AI-powered tools allows businesses to streamline decision-making processes, boost productivity, and gain a competitive edge in dynamic markets.” | “Half the AI tools you bought last year are still in a folder somewhere. Use one. Get a win. Then talk strategy.” |

| “Organizations adopting artificial intelligence must prioritize ethical considerations, data security, and transparency to ensure responsible innovation and long-term success.” | “If you ship without thinking about ethics, someone else is going to think about it for you… in court.” |

🤖 AI Created Content Pattern #2 [Overuse of certain words, phrases and sentences.]

You are not imagining things. AI-generated content often reads like a term paper or 1800s-era prose because that’s exactly what it was trained on! Not only is the driest, most scientific, and most complex type of content included in the training data; the LLM also ranks it as the most valuable and most trustworthy to replicate.

Imagine a ranking system D-tier to S-tier for content to train your AI.

Formal writing and everything in the ‘public domain’ (Think ‘library of Congress level’) is scored S-tier. To the LLM, there is no ‘better’ representation of human creation than the ‘public domain’.

At the bottom of the list is ‘social content’, the type of thing you would read on a minute-to-minute basis in the ‘real world’. This disconnect between everyday readers and formal training data is currently fueling a lot of ‘em-dash’ conversations.

The core issue comes from public domain laws, which set two checkpoints in history depending on the publication date of the content and certain conditions. The legal scholars at Stanford[5] can explain it better than I can:

As of 2019, copyright has expired for all works published in the United States before 1924. In other words, if the work was published in the U.S. before January 1, 1924, you are free to use it in the U.S. without permission. These rules and dates apply regardless of whether the work was created by an individual author, a group of authors, or an employee (a work made for hire).

Because of legislation passed in 1998, no new works fell into the public domain between 1998 and 2018 due to expiration. In 2019, works published in 1923 expired. In 2020, works published in 1924 will expire, and so on.

For works published after 1977, if the work was written by a single author, the copyright will not expire until 70 years after the author’s death. If a work was written by several authors and published after 1977, it will not expire until 70 years after the last surviving author dies.[5]

What does this mean for you? The person trying to create human readable content using these models?

Understanding where the models are getting their data will help you control their output.

Once you understand where the system is pulling its predictions from, you can then translate the output into your voice, or your brand’s ethos and guidelines. When you start to understand how the LLM is solving for its next prediction, the pattern of output becomes easy to see.

Use the following table to start identifying patterns and the individual words you can systematically remove. The goal is to create a system that doesn’t use these in the first place, but for now, let’s focus on identification and removal.

❌ AI-Speak vs ✅ Human Clarity

| AI Buzzword | Humanized Option | Why it Works |

| Utilize | Use | Simpler, more direct |

| Facilitate | Help | Clearer meaning |

| Leverage | Use/Take advantage of | Less corporate-speak |

| Implement | Set up/Start | More actionable and conversational |

| Optimize | Improve | Optimize has lost meaning in 2025 |

| Comprehensive | Complete/Thorough | Too complicated of a concept |

| Robust | Strong/Reliable | Robust is for smells and flavors |

| Seamless | Smooth | Seamless only makes sense in socks |

| Innovative | New/Creative | Every SaaS is innovative now. |

| Cutting-edge | Latest/Advanced | More grounded, less ephemeral |

| Delve into | Investigate, discover | Modern language dropped this. |

| In the X of Y world | Remove and replace concept | It’s a meme now |

| — (em dash) | Literally any other punctuation | We didn’t know ’em’ dash existed |

| Peering | Looking ahead | It’s used to talk about the future. |

| Unveiling | Revealing, Unboxing, Showing | Passive and no one wears a veil |

| Harnessing | Using | Stunt performers use harnesses |

| Exploring | Remove and replace | Unless about literal exploring, it’s lazy |

| Enable | Allow / Let | Less abstract and more human |

| Drive | Push / Cause | Cars drive, people don’t |

| Disrupt/Disruptive | New / Different / Novel / Unusual / Atypical | ‘Disruptive’ is startup cosplay |

| Synergy | Working together | Silicon Valley -> MLM -> AI. |

| Solution | Fix / Answer | Everything solves something now |

| Ecosystem | Group / Team / Company / Brand | This is not a nature documentary |

| Stakeholders | People / Team / Colleague | Agile developer? No? Stop it |

| Empower | Help / Support | Empowering is an ego word |

| Pivot | Change direction / Switch / Swap | Pivot doesn’t even make sense |

| Deploy | Start / Launch / Roll Out | In the military? No? Stop it |

| Iterate | Try Again / Improve / Test | Are you a SWE? No? Stop it |
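If you want to run the table above against a draft, a small script can do the first pass. This is a sketch under assumptions: `SWAPS` holds only a handful of rows from the table (each with one of its suggested replacements), the function names are made up, and it only catches exact words, so forms like “leveraging” or “seamlessly” slip through and replacements come back lowercase.

```python
import re

# A few rows from the table above; extend with the rest as needed.
SWAPS = {
    "utilize": "use",
    "facilitate": "help",
    "leverage": "use",
    "robust": "reliable",
    "seamless": "smooth",
    "delve into": "investigate",
}

# One pattern that matches any listed buzzword as a whole word or phrase.
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(word) for word in SWAPS) + r")\b",
    re.IGNORECASE,
)


def find_buzzwords(text: str) -> list[str]:
    """Return the listed buzzwords found in the text, lowercased, in order."""
    return [match.group(1).lower() for match in _PATTERN.finditer(text)]


def swap_buzzwords(text: str) -> str:
    """Replace each listed buzzword with its plainer option."""
    return _PATTERN.sub(lambda match: SWAPS[match.group(1).lower()], text)


draft = "We utilize a robust platform to facilitate onboarding."
print(find_buzzwords(draft))  # ['utilize', 'robust', 'facilitate']
print(swap_buzzwords(draft))  # We use a reliable platform to help onboarding.
```

Treat the output as a flag, not a finished edit. A swapped word still needs a human to check that the sentence says something.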

Examples: Spotting & Fixing AI-Speak

AI-Generated Sentence (Buzzword Soup):

“In today’s fast-paced digital landscape, leveraging cutting-edge tools is essential to seamlessly optimize your workflow and drive robust outcomes.”

Human Rewrite:

“Online or off, the quality of your tools determines how far you can optimize your workflows.”

Notice the difference between the two? The first one doesn’t actually mean anything. That statement is a safe and harmless way of judging the quality of the reader’s tool choice, without actually doing the judging. The second one makes a bold statement that is slightly confrontational to the reader. It places the onus on them to choose the correct tool over the incorrect one, and it will hopefully keep the reader going, positioning the writer as the authority who recommends the ‘correct tool’.

Every word gives the reader a chance to leave. If you let AI write without oversight, you’re just pulling a lever and hoping they stay.

🤖 AI Created Content Pattern #3 [No personal identifying information.]

Remember from pattern 2: the model is trained on, and highly prefers, academic and fact-based content. Personal anecdotes and endearing emotional information don’t survive that editorial process. They are replaced with objective facts or ignored completely. PhD candidates do not include personal anecdotes or pause for emotional connection. Their goal is to convey information in the most forthright and objective way, in direct opposition to how humans ‘typically’ communicate.

We also have to think about data privacy and security in the training data from companies like OpenAI and Google. They have entire squadrons of lawyers making sure they at least skirt the law when scraping the internet for training data.

Humanizing PII is removed on purpose, before training.

If the LLM could actually identify, say, my personal blog as a training source, there would be consequences for the people who trained the model, especially in the EU. At the same time, if my personal blog never mentioned me or my circumstances, it wouldn’t be my personal blog. To circumvent this, all data is anonymized and anything PII is scrubbed, which means any type of personalized or endearing details are deleted before training. This is crucial to remember when trying to get the LLM to mimic human emotion and storytelling.

Red Flags:

🚩 Absence of unique perspectives, imagine if the ‘mid-Atlantic’ accent was content.

🚩 No personal anecdotes or examples to humanize the experience.

🚩 Generic advice without context, examples or continuation.

🚩 Zero emotional connection points, reflections or poignant deep thoughts.

The Human Touch Injection:

| Content Type | Make sure you have | Example |

| Social Media Content | Personally Identifiable Information | “My wife caught me playing with the dog instead of working…” |

| Business Advice | Personal failure story | “I lost $50K learning this lesson…” |

| How-to Guides | Specific example of how you use a tool | “I use Notion in this way, for this because…” |

| Industry Analysis | Your real contrarian opinion | “Everyone says X, but I’ve found Y…” |

| Product Reviews | Real usage experience | “After 6 months of daily use…” |

🤖 AI Created Content Pattern #4 [ The ‘Overexplainer’ Loop ]

A.I. has access to trillions of words, infinite sentence structures, and every possible way to phrase an idea, yet it still explains everything like it’s talking to a goldfish.

Why? Because it isn’t trying to be clever. It’s trying to be accepted.

So instead of trusting the first clear version of an idea, it repeats, reframes, and rephrases until your brain gives up and says, “Okay, I get it… I think?”

Red Flags

🚩 Repeated logic, slightly changed.

🚩 Over explaining simple concepts.

🚩 Safe and generic triplets, helpful x3.

🚩 Lack of a continued thought.

LLMs play the statistical game of most-likely-to-succeed. When it comes to explanation, that means trying every common phrasing for a single idea.

A.I. optimizes for acceptance, not clarity.

What do you get? The Clarification Spiral.

Example:

🤖 “Helping someone is useful because it helps. Helping is useful in situations where usefulness is important.”

What’s happening here:

The A.I. is only concerned with the answer being accepted, so it bets you’ll give up and just nod by the third repetition. A.I. leans into triplets because it’s a bias the A.I. is programmed to optimize for.

Once the A.I. gets itself into a logic loop where it needs to be helpful, it will define for itself what helpful is, tell you what helpful is, and then redefine itself as helpful.

That’s great and all, but you didn’t receive any help, just the triple definition of what help is. It reminds me of “It depends upon what the meaning of the word ‘is’ is” from the Clinton era. The illusion of depth in repetition tricks you into thinking meaning happened, but nothing did. It’s a loop of noise echoing back a shared need to understand.

How to spot the pattern:

Pattern Breakdown

| Component | What It Looks Like | Why It Happens |

| Triple Redundancy | “Helping someone is useful because it helps.” | A.I. is optimizing for comprehension-by-repetition. |

| Circular Definitions | Defines ‘helpful’ using ‘helpful’, then loops. | A.I. models reinforce their own logic to feel consistent. |

| No Forward Motion | After the loop, there’s no next idea or insight. | The loop feels like progress but actually stalls out. |

| Avoids Risk | Sentences are universally agreeable and vague. | A.I. plays it safe to avoid contradiction or rejection. |
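The loop in the breakdown above can be roughly measured by checking how many words two neighboring sentences share. The sketch below uses plain word overlap (shared words divided by all distinct words across the pair). The function names are made up, the second sample is invented for contrast, and no score cutoff is implied.

```python
import re


def word_set(sentence: str) -> set[str]:
    """Lowercase the sentence and return its distinct words."""
    return set(re.findall(r"[a-z']+", sentence.lower()))


def adjacent_overlap(text: str) -> list[float]:
    """Word overlap (shared / total distinct) for each pair of neighboring sentences."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    sets = [word_set(s) for s in sentences]
    scores = []
    for first, second in zip(sets, sets[1:]):
        union = first | second
        scores.append(len(first & second) / len(union) if union else 0.0)
    return scores


# The loop example from this section, plus a made-up contrast.
loop = (
    "Helping someone is useful because it helps. "
    "Helping is useful in situations where usefulness is important."
)
moving = (
    "Most teams do not need AI. "
    "They need to stop doing the same task ten times a week."
)

print(adjacent_overlap(loop))  # [0.25]
print(adjacent_overlap(loop)[0] > adjacent_overlap(moving)[0])  # True
```

High overlap between neighbors is a hint that the second sentence is restating the first instead of moving the idea forward. It is a hint only: it misses rephrasings that use different words.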

🤖 AI Created Content Pattern #5 [ Transition word addiction ]

A.I. doesn’t actually know how to conceptualize or create. It’s not thinking in arcs or meaning. It’s running probability equations.

The only thing that matters is the probability that the next word or set of words is going to be accepted.

Not whether those words make sense or complete a thought.

Just: do these words fit together with the highest probability.

Read that again.

The model isn’t “aware” of grammar, nor does it care about narrative flow. If it was trained on Reddit threads and corporate emails, that’s what you’ll get. If it was trained on leetspeak and emoji spam, expect exactly that back; finely tuned gibberish with punctuation.

That’s why transitions matter. That’s where you’ll see the seams.

Humans use transitions to: shift tone, breathe, reset the pace, or build momentum. You’ve felt that here already.

I’ve pulled you from education to entertainment and back again. I’ve used images, blocks, lists, and table structure to recalibrate your brain and give your nervous system a break from high-concept load.

A.I. doesn’t do that. It doesn’t know when to slow down or ramp up. It doesn’t understand scenes or beats. It just slides word to word in an endless string of “most likely to follow.”

That’s where the addiction to robotic transition words kicks in.

These words show up like flies to a picnic in A.I. generated content. The A.I. doesn’t understand emotional movement or how to create a transition between ‘thoughts’ so the content always feels like it has jump cuts, not fade dissolves.

So when you read AI-generated content, you might not notice what’s missing at first. But you’ll feel it. That weird limbo where the topic changes, but nothing really moved. No tone shift. No narrative beat. Just new words.

A.I. cannot tell a story, it can only solve for probability.

Human Transitions Hit Different:

“Here’s the thing…”

“But wait!!! there’s more.”

“Plot twist:”

“The reality?”

They’re not just words, they’re handshakes. Signposts. Permission to come along.

A.I. doesn’t give you that. It just keeps typing.

🤖 AI Created Content Pattern #6 [ Stat Salad and Quote Soup ]

AI loves to front-load numbers and quotes like it’s stitching together a TED Talk from BuzzFeed quiz scraps.

“Studies show 68% of businesses using AI report increased productivity.”

“Experts agree this method is 40% more effective.”

“According to Harvard Business Review…”

Cool. Which study? Which businesses? What even counts as “productivity”? This isn’t insight. It’s stat cosplay.

Why This Fails:

- It’s generic – You’ve read this exact phrasing a hundred times.

- It’s unverifiable – No source, no date, no credibility.

- It’s lazy credibility – Sounds smart, says nothing.

These are just filler numbers meant to feign depth. They’re the verbal equivalent of instant mashed potatoes: smooth, fast, and nutritionally void.

The AI Can’t Even Know This Stuff

By design, the model has been stripped of personal identifying info.

It’s not trained on specific verticals.

It’s not up to date.

And most importantly: it doesn’t pull from raw data.

There’s no query. No index. No search. Just probabilistic guessing.

Ask: how many hairdressers increased revenue after switching to online booking?

It’ll give you a percentage.

But that number? It’s a hallucinated average of other hallucinated averages.

Even when trained on spreadsheets or databases, the model doesn’t understand math. It doesn’t “calculate.” It predicts based on word proximity and pattern confidence.

When “78% growth” is most likely to come after “new strategy,” it goes with that.

Whether it’s true? Not its problem.

This is a subtle but deadly distinction. Humans trust numbers. They carry weight. But these aren’t your numbers. They aren’t real numbers. They’re vibes dressed as data.

Human Rewrite Strategy:

Ditch the generic stat. Anchor it to a moment that actually happened.

“Studies show 60% of teams improve after switching to async tools.”

vs

“After switching to async updates, Mike’s team at BlockSync got 9 hours a week back and shipped 3 weeks faster.”

Adding in personal and specific details humanizes the content and is the single largest remover of doubt in AI generated content. If you alter one pattern only (bad idea), this is the one to focus on. Personalized, specific stats are sticky and build trust.

📌 When You Do Use Stats:

- Cite the damn source – Link it or lose trust.

- Contextualize it – Why should I care about this number?

- Add a story – Stats are scale and facts. Stories are how you connect. Use both.
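The “cite the source” rule above is easy to spot-check. This sketch flags any line that contains a percentage but no link and no bracketed citation like `[2]`. The function name and the two patterns are assumptions for illustration, and it only looks at percentages, so a claim like “9 hours a week” is not checked.

```python
import re

STAT = re.compile(r"\d+(?:\.\d+)?%")       # e.g. 68% or 12.5%
SOURCE = re.compile(r"https?://|\[\d+\]")  # a link or a [n] citation


def unsourced_stats(lines: list[str]) -> list[str]:
    """Return lines that contain a percentage but no link or [n] citation."""
    return [
        line for line in lines
        if STAT.search(line) and not SOURCE.search(line)
    ]


draft = [
    "Studies show 68% of businesses using AI report increased productivity.",
    "73% of people think they can spot AI-generated content[2].",
    "Experts agree this method is 40% more effective.",
    "No numbers in this line.",
]

for line in unsourced_stats(draft):
    print(line)
```

Run on the sample draft, it prints the first and third lines. A flagged line isn’t automatically wrong. It’s a prompt to go find the source or cut the number.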

⚠️ TL;DR: If it reads like a LinkedIn post ghostwritten by a toaster, it probably was.

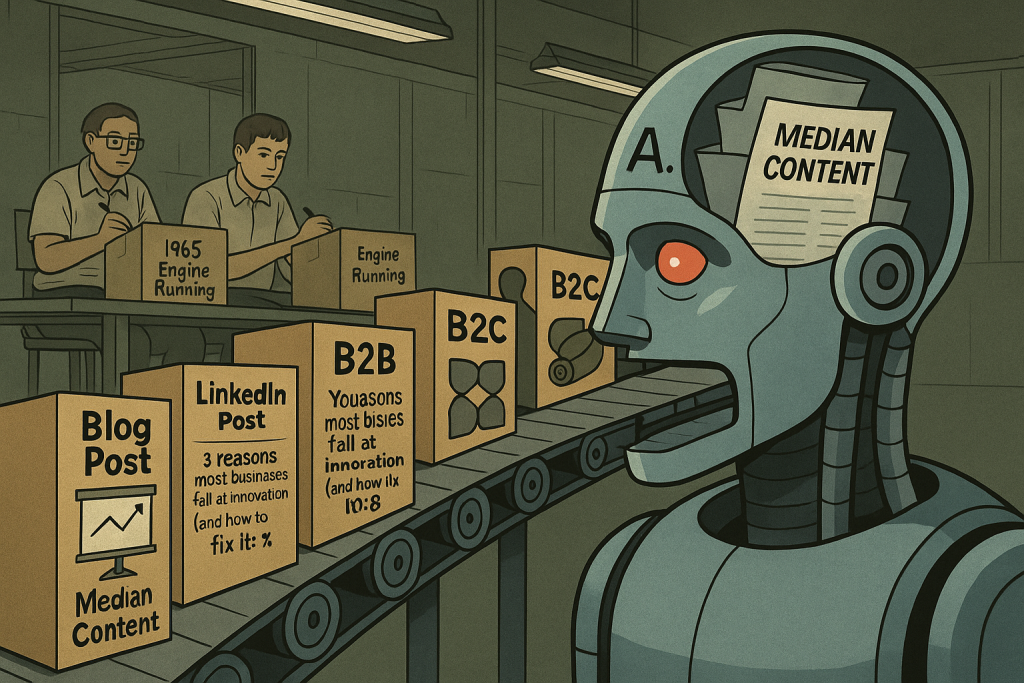

Industry Specific AI Content Patterns:

We have established that A.I. generated content has seams and patterns, but most people still don’t get why it feels off. It isn’t only what is fed to the model; it’s how the data was labeled when it was being trained.

Say “write a blog post” and the model gives you this:

“In today’s fast-paced business world, staying ahead of the curve requires innovation, adaptability, and strategic thinking.”

Now say “write a LinkedIn post” on the same topic:

“3 reasons most businesses fail at innovation (and how to fix it): 🧵”

Same idea. Completely different delivery. The tone shifts, the structure warps, the entire voice is re-skinned.

One’s pretending to be helpful. The other is pretending to be human.

And it gets worse in B2B and B2C.

You prompt with “B2B” and watch it default to:

“Unlock scalable solutions by leveraging cross-functional synergies.”

Or you say “B2C,” and suddenly you’re in 2007 BuzzFeed hell:

“You’ll never believe how easy it is to save money with this one trick.”

This is not intelligence. It’s compliance to tone archetypes. It’s pattern-matching dressed up like strategy.

The LLM isn’t ‘writing.’ It’s performing the role of content.

And the more specific your industry, the tighter that role becomes.

- In SaaS, every post is about scale, churn, and vague ‘growth hacks’.

- In Health, it’s all soft language, fake empathy, and recycled wellness tips.

- In Finance, it’s a stiff authority with zero edge, numbers without teeth.

It sounds right because you’ve been trained to think that’s what authority looks like.

But it’s empty. It’s puppetry. It’s what a statistical “median” of your industry represents in content, and no one wants the median, do they?

The reason behind these tone shifts is very technical and outside the realm of this guide, but basically it’s because the system needs to identify content ‘somehow’, and human labels are the best we have so far. The problem with human labeling is that we are lazy and the work is incredibly boring. Plus, the people doing the labeling are in low-cost-of-living countries without fair wage protection.

What does this all mean? The output is slop because the labels are loose. Look at, say, AudioLDM2, a model meant to build audio from text. It’s trained on the sound, and only the sound, of 57,000 YouTube videos, BUT a 1965 V8 Corvette and a 1996 I4 Honda Civic are both labeled ‘engine running’.

Do you think a 1965 Corvette and a 1996 Honda Civic sound the same? To the LLM, they are identical, because they were labeled as such in the training process.

We get AI slop out of the model because it was trained that way. It’s that simple, really.

Future-Proofing Your AI Content

AI content isn’t coming. It’s here. And if your brand isn’t using it yet, you will be, or you’ll fall behind.

That’s not doomer talk. It’s just math. But using AI doesn’t mean sacrificing your voice. It means learning how to bend the machine to your tone, not the other way around.

This breakdown was step one: spot the seams. Step two? Learn to stitch it like a human would.

I built a framework for that. It’s simple, it works, and it turns generic AI slop into brand-consistent, trust-building content.

Want to work with Shawn? Have a local AI problem you want to figure out? Book a Free discovery call

👉 If you made it this far, you’re ready. Let’s humanize your AI.

Click here to read “HUMANIZE Your AI Content”

Sources:

[1] Grammarly AI detector | QuillBot AI detector | GPTZero AI detector

[2] https://www.mckinsey.com/capabilities/quantumblack/our-insights/seizing-the-agentic-ai-advantage

[4] https://automatetowin.com/products/contentBuddy

[5] https://fairuse.stanford.edu/overview/public-domain/welcome/

[6] https://en.wikipedia.org/wiki/Rule_of_three_(writing)

[7] https://news.uoregon.edu/content/study-grouping-things-categories-can-bias-perceptions

[8] https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0056000

[9] https://cmr.berkeley.edu/2022/01/the-reputational-risks-of-ai/